- GPT-4 supports image and text input, while GPT-3.5 only accepts text.

- GPT-4 has shown human-level performance on a variety of professional and academic exams. For example, it passed a simulated bar exam with a score in the top 10% of test takers.

- OpenAI spent 6 months testing and tuning GPT-4. In casual conversation, the difference between GPT-3.5 and GPT-4 can be subtle, but it becomes obvious on more complex tasks. GPT-4 is more reliable and creative than GPT-3.5 and can handle much more nuanced instructions as well as complex images. However, OpenAI acknowledges that GPT-4 is not perfect: it still has problems with factual accuracy, makes reasoning errors, and can be overconfident.

- Using GPT-4 in ChatGPT requires an active ChatGPT Plus subscription ($20 per month). OpenAI may introduce a separate subscription tier for high-volume GPT-4 usage, and hopes to eventually offer some free GPT-4 queries to users without a subscription.

Features and usage examples of the new model

Over the past two years, the team rebuilt its entire deep learning stack and, together with Azure, designed a supercomputer from scratch for this workload. A year ago, OpenAI trained GPT-3.5 as a first "test run" of the whole system; this uncovered several bugs, which were fixed, and improved the underlying foundations. The result is GPT-4, whose training run was stable and which is OpenAI's first large model whose training performance could be accurately predicted in advance.

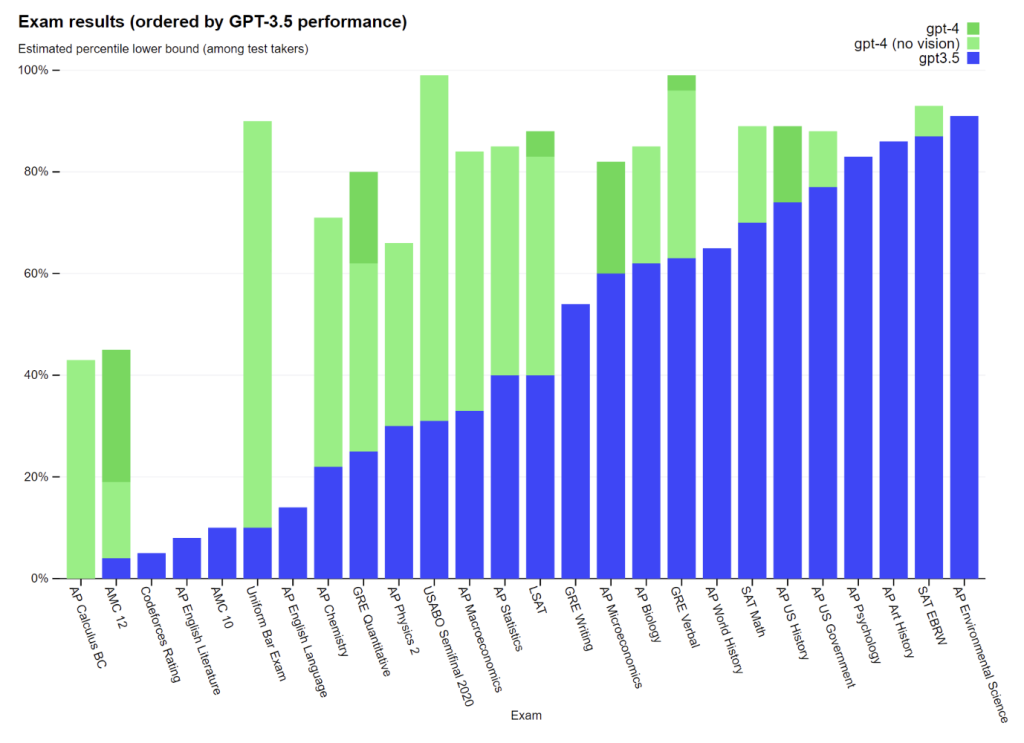

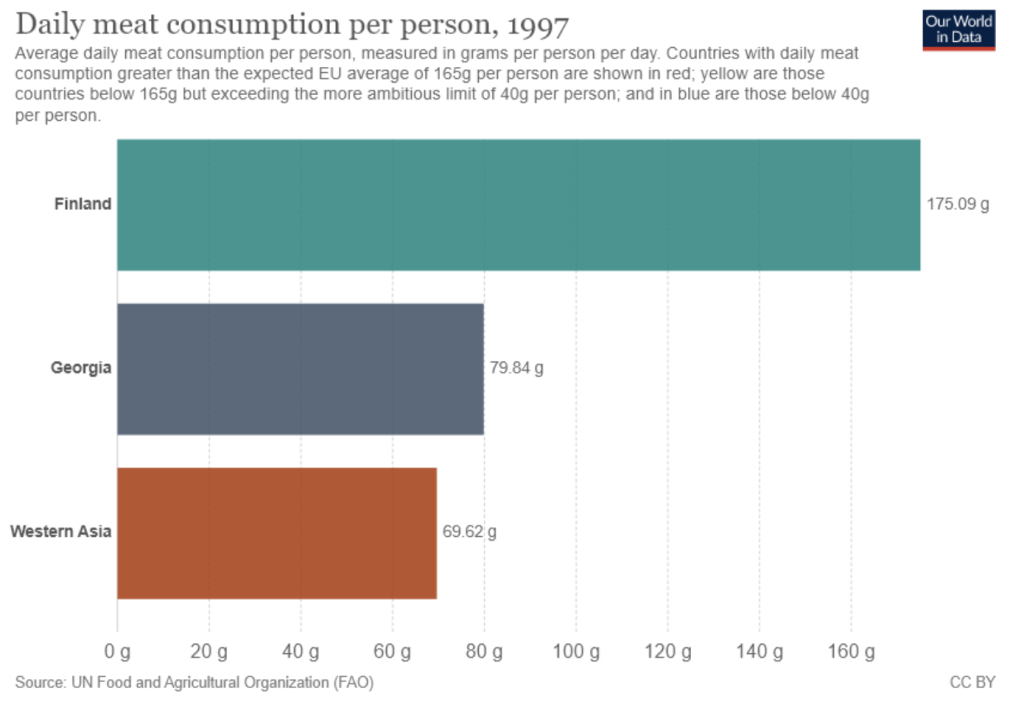

On simple queries, GPT-3.5 and GPT-4 differ only slightly. The difference becomes visible on complex tasks that require creativity, reliability, and highly detailed answers, such as exams and olympiad problems. The green bars in the chart show how much better the new model performs:

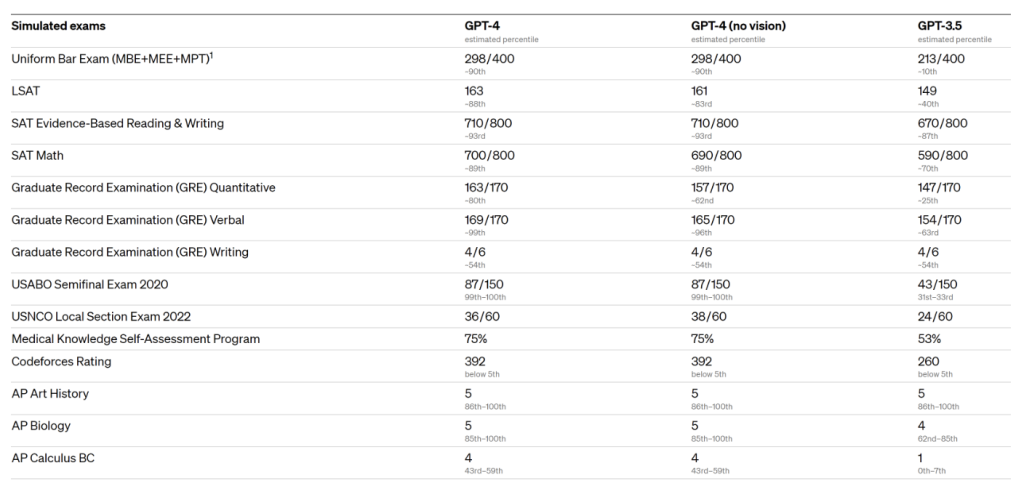

The table below shows GPT-4's scores on various US exams; the small print gives the percentile GPT-4 reached among test takers. The SAT Math section is of particular interest. It covers algebra and geometry, including questions on functions and absolute value, and on equations involving radicals, exponents, and functions. GPT-4 scored 700 out of 800, placing it in roughly the top 11% of test takers. Notably, the model received no specific training for these exams:

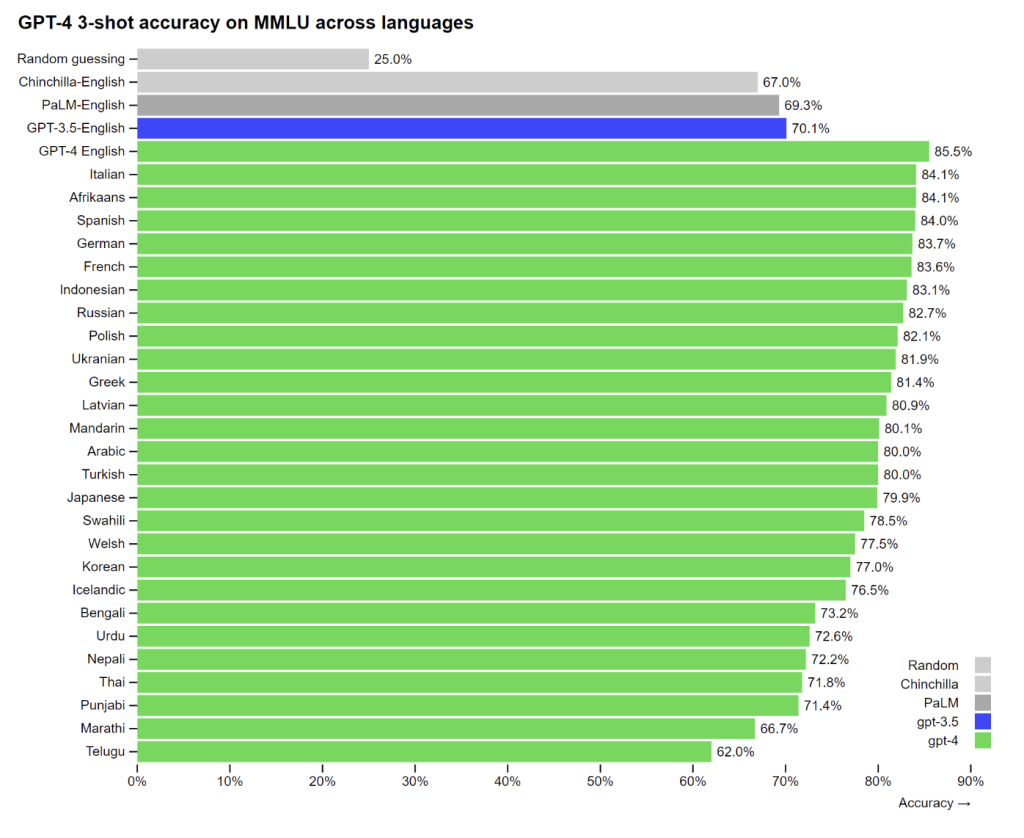

The developers also checked how well the model handles other languages by testing it on the MMLU benchmark translated into 26 languages. English scored highest at 85.5%, followed by Italian at 84.1%. Russian scored 82.7%, Thai 71.8%, and Telugu (a language spoken in India) 62.0%, the lowest of the languages tested.

Visual input

GPT-4 now understands not only text but also images: documents containing text and photos, diagrams, screenshots, and much more.

In this picture, the model correctly recognized that the iPhone charging cable is styled to look like an old VGA connector. It also explained that the joke lies in plugging a large, outdated connector into a small, modern smartphone:

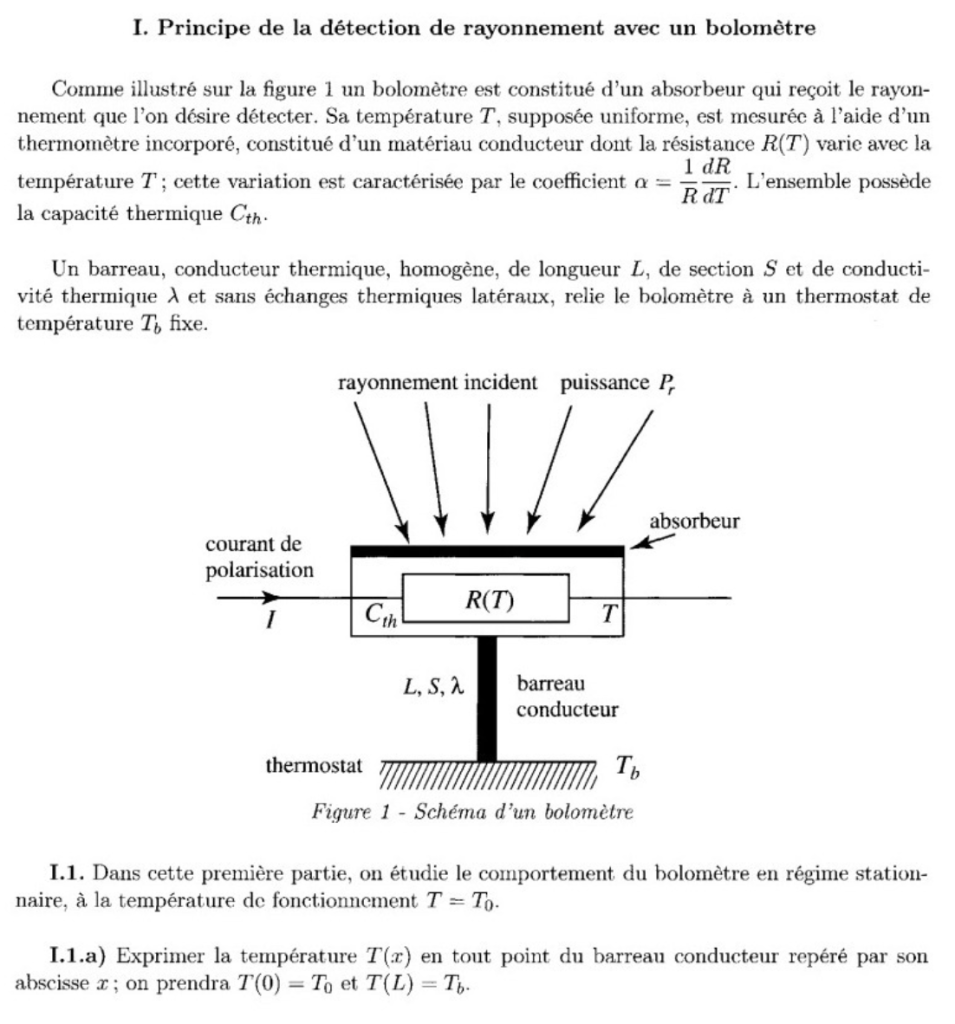

From this chart, the model extracted the data and added up the average daily meat consumption for Georgia and Western Asia:

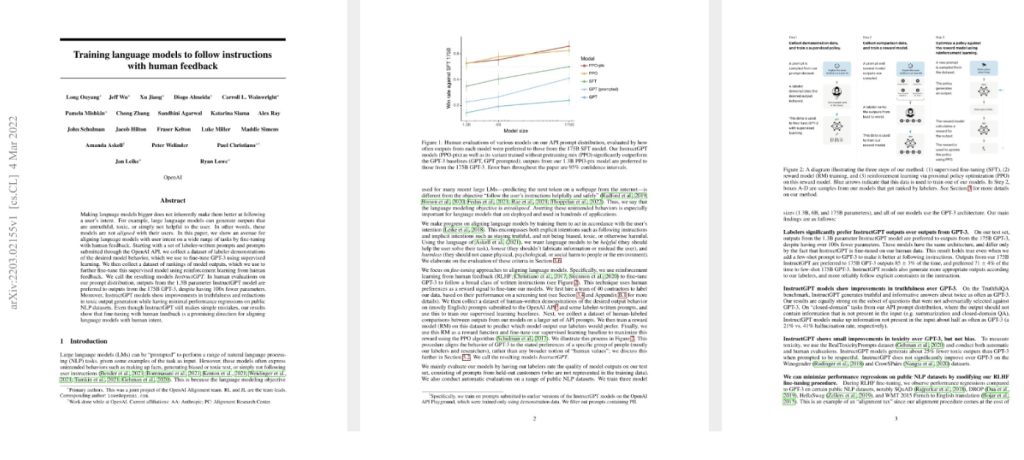

The model also solved a physics problem written in French, explaining the solution step by step.

It correctly determined that the man in one picture is ironing clothes on an ironing board attached to the roof of a car.

It summarized a complex set of instructions.

It explained a meme in which a map of the Earth is laid out from chicken nuggets.

Steerability

Instead of the classic ChatGPT personality with a fixed verbosity, tone, and style, developers (and soon ChatGPT users) can prescribe their AI's style and task by describing these preferences in a "system" message. In the API, system messages let developers customize their users' experience within certain bounds.
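A minimal sketch of what such a request looks like, assuming the Chat Completions message format (a list of objects with `role` and `content` fields). The system message comes first and sets the style and task, and the user's message follows. The helper name `build_chat_messages` and the tutor prompt are illustrative assumptions, not part of any official API.

```python
import json


def build_chat_messages(system_prompt: str, user_prompt: str) -> list[dict]:
    """Return a Chat Completions-style message list: system first, then user.

    build_chat_messages is a hypothetical helper; the role/content layout
    follows the Chat Completions message format.
    """
    return [
        {"role": "system", "content": system_prompt},  # style and task
        {"role": "user", "content": user_prompt},      # the actual question
    ]


messages = build_chat_messages(
    "You are a Socratic tutor. Never give the answer directly; ask guiding questions.",
    "How do I solve 3x + 2 = 11?",
)
print(json.dumps(messages, indent=2))
```

Changing only the system string (for example, "Answer in one sentence" or "Reply as a pirate") changes the assistant's behavior without touching the user's message.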

Limitations

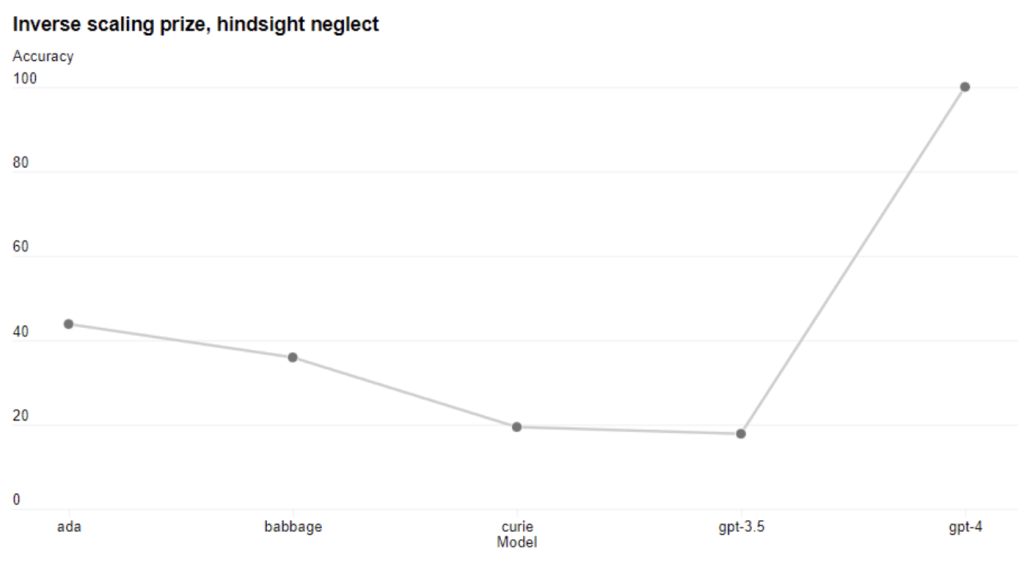

GPT-4 has similar limitations to earlier GPT models: it still makes up facts and makes reasoning errors. Even so, on OpenAI's internal adversarial factuality evaluations, GPT-4 scores 40% higher than GPT-3.5.

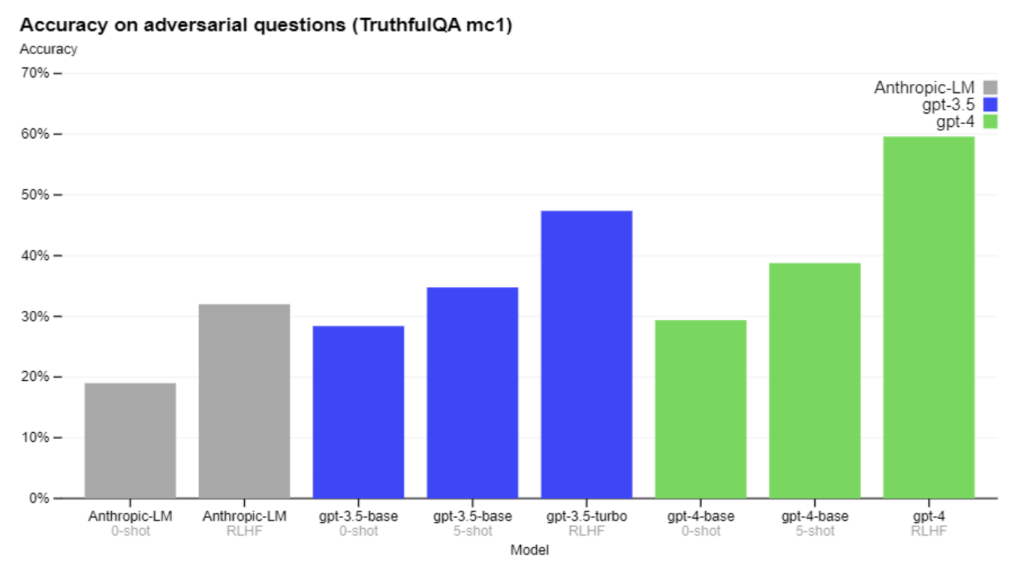

GPT-4 also makes progress on external benchmarks such as TruthfulQA, which tests a model's ability to separate facts from a set of false statements.

GPT-4 generally lacks knowledge of events that occurred after September 2021 and does not take them into account in its answers. It can sometimes make simple reasoning errors that seem inconsistent with its competence across many domains, be overly gullible in accepting false statements from users, or fail at hard problems in the same ways humans do.

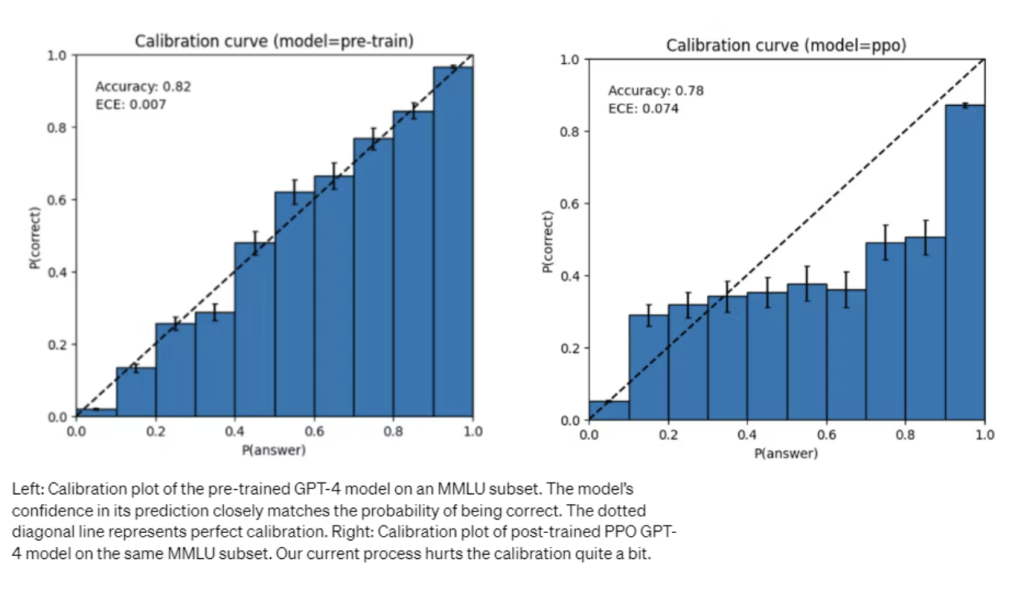

GPT-4 can also be confidently wrong in its predictions, not taking care to double-check its work when it is likely to make a mistake. Interestingly, the base pre-trained model is well calibrated: its predicted confidence in an answer generally matches the probability of that answer being correct. However, the current post-training process reduces this calibration.
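One common way to measure how well calibrated a model is uses expected calibration error (ECE). Predictions are grouped into confidence bins, and the average confidence in each bin is compared with the fraction of answers in that bin that were actually correct. The sketch below is a generic ECE computation assuming equal-width bins. It is not OpenAI's evaluation code, and the function names are illustrative.

```python
def calibration_table(confidences, correct, n_bins=5):
    """Group predictions into equal-width confidence bins and return
    (mean confidence, accuracy, count) for every non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, ok in zip(confidences, correct):
        # min() keeps p == 1.0 in the last bin instead of overflowing
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, ok))
    rows = []
    for b in bins:
        if b:
            mean_conf = sum(p for p, _ in b) / len(b)
            accuracy = sum(ok for _, ok in b) / len(b)
            rows.append((mean_conf, accuracy, len(b)))
    return rows


def expected_calibration_error(confidences, correct, n_bins=5):
    """Count-weighted average gap between confidence and accuracy.
    0 means perfectly calibrated on this data."""
    total = len(confidences)
    return sum(
        n / total * abs(conf - acc)
        for conf, acc, n in calibration_table(confidences, correct, n_bins)
    )
```

For example, a model that answers ten questions with 90% confidence and gets nine right has an ECE near 0. If it gets only five right with the same confidence, the ECE is 0.4. That second case is the kind of overconfidence post-training can introduce.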

Risks and measures to reduce them

The team improved GPT-4's safety starting with the selection and filtering of pre-training data. It also engaged domain experts to adversarially test the model on high-risk requests, and used their feedback and data to improve the model. For example, the team trained GPT-4 to refuse requests such as how to synthesize dangerous chemicals.

Compared to GPT-3.5, the developers reduced GPT-4's tendency to respond to requests for disallowed content by 82%. GPT-4 also responds to sensitive requests (such as medical advice and self-harm) in accordance with OpenAI's policies 29% more often.
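Both figures read as relative changes rather than absolute rates. Let $r$ be the fraction of disallowed requests a model responds to, and let $s$ be the fraction of sensitive requests it handles in line with policy. The stated improvements then work out as follows. The 10% starting rate in the second line is a hypothetical number chosen only to illustrate the arithmetic:

$$
r_{\text{GPT-4}} = (1 - 0.82)\, r_{\text{GPT-3.5}} = 0.18\, r_{\text{GPT-3.5}}, \qquad
s_{\text{GPT-4}} = 1.29\, s_{\text{GPT-3.5}}
$$

$$
r_{\text{GPT-3.5}} = 0.10 \;\Rightarrow\; r_{\text{GPT-4}} = 0.18 \times 0.10 = 0.018
$$

In other words, a model that answered 10 of every 100 disallowed requests would answer about 1.8 of them after an 82% reduction.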

Overall, these interventions have made it harder to get the model to produce harmful content. However, users can still find "jailbreaks" that bypass the safeguards and gain access to dangerous content. As the risks associated with AI grow, achieving very high reliability in these interventions will become critical.

GPT-4 and subsequent models are likely to have both positive and negative effects on society. The team is working with external researchers to assess these potential impacts, both now and in the future.

Training process

Like earlier GPT models, GPT-4 was trained to predict the next word in a document, using publicly available data. The resulting base model can answer a question in many different ways, some of them far from what the user intended. To align its behavior with user intent, the model is fine-tuned with reinforcement learning from human feedback (RLHF). However, the model's capabilities appear to come mainly from pre-training, not from this feedback-based fine-tuning.
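The "predict the next word" objective can be illustrated with a toy bigram model that counts which word follows which in a corpus. This is a deliberately simplified stand-in, not how GPT-4 works: GPT-4 is a large neural network trained on tokens, not a word-count table. The corpus and function names below are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str):
    """Count, for each word, how often each other word follows it."""
    words = corpus.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word: str):
    """Return the most frequent next word seen in training, or None."""
    counts = model.get(word.lower())  # .get() does not create new keys
    if not counts:
        return None
    return counts.most_common(1)[0][0]


model = train_bigram("the cat sat on the mat and the cat slept")
print(predict_next(model, "the"))  # cat
```

In the toy corpus, "the" is followed by "cat" twice and by "mat" once, so "cat" is the prediction. A real language model learns a far richer probability distribution over the next token from context, but it is trained on the same kind of objective.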

Predictable scaling

A major focus of the GPT-4 project was building a deep learning stack that scales predictably. The team developed infrastructure and optimizations that behave predictably across a wide range of scales. It also began developing a methodology for predicting more interpretable metrics. For example, the team successfully predicted the pass rate on a subset of the HumanEval coding dataset by extrapolating from models trained with 1,000 times less compute.
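The core idea of such extrapolation can be sketched as follows. Results from small training runs are fitted with a power law in compute, and the curve is then read off at a much larger compute budget. This is a simplified assumption, not OpenAI's actual procedure: it fits a plain power law (a straight line on a log-log plot) to hypothetical loss values generated from `loss = 5 * compute**-0.05`.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of loss ~ a * compute**b via a line on log-log axes."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Slope of the log-log line is the power-law exponent b
    b = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    a = math.exp(mean_y - b * mean_x)
    return a, b


def predict(a, b, compute):
    """Evaluate the fitted power law at a given compute budget."""
    return a * compute ** b


# Hypothetical small-model runs (compute in arbitrary units)
small_compute = [1.0, 10.0, 100.0, 1000.0]
small_loss = [5 * c ** -0.05 for c in small_compute]

a, b = fit_power_law(small_compute, small_loss)
big_compute = 1000.0 * 1000  # 1,000x more than the largest small run
print(round(predict(a, b, big_compute), 4))
```

Real scaling curves are noisy and usually include extra terms (for example, an irreducible-loss offset). This sketch only shows the fit-then-extrapolate step.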

The team believes that accurately predicting future machine learning capabilities is important for safety, and it is increasing its efforts in this direction.

OpenAI Evals: a framework for evaluating models

The OpenAI team created OpenAI Evals, a framework for evaluating models such as GPT-4. OpenAI uses it to guide model development, both to find weaknesses and to prevent regressions. Users can apply it to track performance across model versions, which will now be released regularly. Stripe has already used Evals to measure the accuracy of its GPT-powered documentation tool.

Because all of the code is open source, users can write new classes that implement custom evaluation logic. The team hopes Evals will become a way to share and crowdsource benchmarks, with the most useful eval templates made available to all users of the platform.
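The following shows what "a new class implementing custom evaluation logic" can mean in practice. This is a plain-Python sketch, not the actual `evals` library API: the classes `Sample`, `ExactMatchEval`, and `ContainsEval`, and their methods, are hypothetical. A base class runs the model over a set of samples, and subclasses override only the scoring rule.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Sample:
    prompt: str
    ideal: str  # the expected answer


class ExactMatchEval:
    """Hypothetical eval: scores 1 when the answer matches the ideal exactly."""

    def __init__(self, samples: list[Sample]):
        self.samples = samples

    def score(self, answer: str, ideal: str) -> float:
        # Custom logic lives here; subclasses override this method.
        return 1.0 if answer.strip().lower() == ideal.strip().lower() else 0.0

    def run(self, model: Callable[[str], str]) -> float:
        """Return the mean score of `model` over all samples."""
        scores = [self.score(model(s.prompt), s.ideal) for s in self.samples]
        return sum(scores) / len(scores)


class ContainsEval(ExactMatchEval):
    """Variant that accepts any answer containing the ideal string."""

    def score(self, answer: str, ideal: str) -> float:
        return 1.0 if ideal.lower() in answer.lower() else 0.0
```

Passing a stand-in function as `model` lets the same eval compare different model versions. For example, the answer "It is Paris." fails the exact-match eval but passes the contains eval for the ideal answer "Paris".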

API

To get access to the GPT-4 API (which uses the same ChatCompletions API as gpt-3.5-turbo), you need to sign up for the waitlist.
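Because GPT-4 uses the same Chat Completions API as gpt-3.5-turbo, switching models should only require changing the `model` string. The sketch below assumes the official `openai` Python package (v1 or later), whose client exposes `client.chat.completions.create(...)`. The `ask` helper is hypothetical, and the client is passed in as a parameter so the function can be tried without network access.

```python
def ask(client, model: str, system_prompt: str, question: str) -> str:
    """Send one chat request and return the text of the first choice.

    `client` is assumed to behave like `openai.OpenAI()` from the official
    Python SDK (v1+). `ask` itself is a hypothetical helper.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
    )
    return response.choices[0].message.content


# Usage with the real SDK (requires an API key and GPT-4 access):
# from openai import OpenAI
# client = OpenAI()  # reads OPENAI_API_KEY from the environment
# ask(client, "gpt-3.5-turbo", "Be concise.", "What is GPT-4?")
# ask(client, "gpt-4", "Be concise.", "What is GPT-4?")  # only the model changes
```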